Whatever the domestic repercussions of Robert S. Mueller III’s report, Moscow will likely continue its campaign of disinformation and disruption against American democracy, a prominent analyst tells the New York Times:

Aleksandr Morozov, a frequent Kremlin critic, noted that the report confirmed Russian interference in the election. The Kremlin can hope to either reset relations, wait until the damage fades or continue with business as usual, said Mr. Morozov, a researcher at the Boris Nemtsov Academic Center for the Study of Russia.

“It is difficult to imagine that relations can get better now,” he said. “So that means that the Kremlin will try to continue with the hybrid war and also attempt to push this event out of the Russian-American agenda with the help of some new ones.”

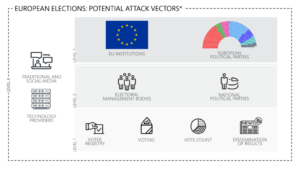

The European Commission predicts the Kremlin’s disinformation operations in the run-up to this May’s parliamentary elections will be “systematic, well-resourced and on a different scale to other countries,” Bloomberg reports:

To minimize the threat, the Commission has more than doubled its spending on counter-disinformation, to 5 million euros this year, and is enlarging its staff of analysts dedicated to tracking disinformation. But Russia’s Internet Research Agency alone has a budget more than double that of all EU counter-disinformation agencies combined. And that doesn’t include the 1.4 billion euros the Russian government spends annually on RT and other mass-media outlets that amplify Kremlin propaganda.

Credit: Microsoft/Belfer Center

Some European leaders still see their continent as the last defender of democracy and multilateralism. Few, unfortunately, are ready to unite to take up that fight, writes Sylvie Kauffmann, the editorial director and a former editor in chief of Le Monde.

The EU is reportedly set to produce a report on “the lessons learnt” in the field of disinformation.

Behind the scenes, as the online hate speech problem worsens, some techies are starting to suggest that instead of just reaching for delete, it’s time to focus on another set of tactics beginning with “D”: demotion, dilution, delay and diversion, writes FT analyst Gillian Tett:

This may sound odd, but the idea was laid out well a couple of weeks ago in a powerful essay by Nathaniel Persily, a law professor at Stanford University, who wrote it to frame the work of the Kofi Annan Commission. … Another possible tactic is diversion/dilution. Consider the “Redirect” project established by Jigsaw, a division of Google. Three years ago, it set out to identify the key words used by internet users to search for Isis recruitment videos – and then introduced algorithms that redirected those users to anti-Isis content. “Over the course of eight weeks, 320,000 individuals watched over half a million minutes of the 116 videos we selected to refute Isis’s recruiting themes,” the Redirect website explains.

Building democratic resilience against disinformation and populism requires additional awareness from policy makers, a recent report suggests. Civil society groups also have a vital role to play.

For example, StopFake is more than a fact-checking project. It is an analytical hub, which has a comprehensive approach to the problems of propaganda and disinformation, says Yevhen Fedchenko, StopFake editor-in-chief and director of the Mohyla School of Journalism:

We are engaged in analytical work, monitoring and research, and the development of new approaches in media education. In fact, we created a trend of using fact-checking to test foreign disinformation, collected irrefutable evidence of the existence of a complex Russian disinformation system and raised awareness about this problem at the international level.

Vladimir Putin has signed two new censorship laws in Russia, one of which explicitly targets “fake news,” Coda Story reports. Ironically, the Kremlin is using European anti-fake news laws to justify its own crackdown — laws whose creation Russia helped justify in the first place.

Given the disagreements among European governments about how aggressively to confront Russia, the Commission may find it difficult to appreciably boost funding for counter-disinformation efforts. But it should at least give more resources to two EU bodies, the East StratCom Task Force and the EU Hybrid Fusion Cell, which monitor fake news and coordinate governments’ responses, Bloomberg adds:

- The Commission should also push member states to sign on to its existing proposal to create a pan-European Rapid Alert System that would expose suspicious social media activity close to elections.

- And it should maintain pressure on social media companies to take down bot accounts and disclose the funding sources behind political ads on their platforms.

European governments should also do more to help their citizens distinguish fact from fiction. They can, for example, support fact-checking sites such as Lithuania’s Debunk.eu, a collaboration among journalists, civil society groups and the military.

And they can bolster digital-media literacy instruction in public schools, as Sweden has. Estonia, a country with a substantial minority of Russian-speakers, has gone so far as to create its own Russian-language public broadcasting channel as an alternative to Kremlin-backed media.

Digital Resilience in Latin America: Automation, Disinformation, and Polarization in Elections

Source: Medium

2018 saw political tides turn in three of Latin America’s largest democracies, notes the Atlantic Council’s Digital Forensic Research Lab (DFRLab). These elections also saw deep polarization and distrust in institutions among Brazilians, Mexicans, and Colombians in an information environment rife with disinformation. And while disinformation and misinformation are nothing new, the spread of false information at alarming rates (facilitated by politicians, non-state actors, or even our own families and friends) is more effective and worrisome than ever. With this trend unlikely to change, how can we detect and combat this borderless phenomenon? What’s next in the fight against disinformation?

Join the Atlantic Council’s Digital Forensic Research Lab (DFRLab) and Adrienne Arsht Latin America Center on Thursday, March 28, from 9:00 to 11:00 a.m. EST for a glance at polarization, automation and disinformation in Latin America, as well as a look at lessons learned from the region’s 2018 elections.

The event will mark the launch of “Disinformation in Democracies: Strengthening Digital Resilience in Latin America.”

**Speakers:**

Katie Harbath, Public Policy Director, Global Elections, Facebook

Carl Woog, Director and Head of Communications, WhatsApp

Francisco Brito Cruz, Director, InternetLab (Brazil)

Andrew Sollinger, Publisher, Foreign Policy, Foreign Policy Group

Gerardo de Icaza, Director of the Department of Electoral Cooperation and Observation, Organization of American States (OAS)

Carlos Cortés, Co-Founder, Linterna Verde (Colombia)

Tania Montalvo, Executive Editor, Animal Político; Coordinator Verificado 2018 (Mexico)

Amaro Grassi, Lead Coordinator, Digital Democracy Room, Department of Public Policy Analysis, Fundação Getulio Vargas (FGV-DAPP) (Brazil)

Graham Brookie, Director and Managing Editor, Digital Forensic Research Lab (DFRLab), Atlantic Council

Roberta Braga, Associate Director, Adrienne Arsht Latin America Center, Atlantic Council

Thursday, March 28, 2019. 9:00 a.m. – 11:00 a.m. EST

Location: Atlantic Council Headquarters

1030 15th St NW, 12th Floor

Washington, DC 20005