Credit: IRI

Western democracies are ill-prepared for the coming wave of “deep fakes” that artificial intelligence could unleash, according to Chris Meserole, a fellow in the Brookings Institution’s Center for Middle East Policy, and Alina Polyakova, the David M. Rubenstein Fellow in Brookings’ Center on the United States and Europe. Deep fakes and the democratization of disinformation will prove challenging for governments and civil society to counter effectively, they write for Foreign Policy:

Because the algorithms that generate the fakes continuously learn how to more effectively replicate the appearance of reality, deep fakes cannot easily be detected by other algorithms — indeed, in the case of generative adversarial networks, the algorithm works by getting really good at fooling itself. To address the democratization of disinformation, governments, civil society, and the technology sector therefore cannot rely on algorithms alone, but will instead need to invest in new models of social verification, too.

“Bots are already yesterday’s medium for spreading disinformation,” said Camille Francois, director of research and analysis at Graphika, addressing the TNW Conference today.

During the US presidential election, Russian operatives seeking to further inflame tensions bought dozens of Facebook ads targeted at the Hispanic community, according to a USA TODAY analysis. The aim was to stir outrage on both sides, says propaganda expert James Ludes.

“Because at the end of the day, the Russians don’t really care what the policy is, they care about the divisiveness that the issue itself engenders,” says Ludes, vice president for public research and initiatives at Salve Regina University. “They want to amp up the divisiveness and give it as loud a voice as they possibly can.”

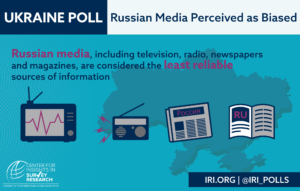

IRI

Polling by the International Republican Institute – a core affiliate of the National Endowment for Democracy – is revealing how disinformation spreads through networks and identifying who is most vulnerable to it, according to IRI’s Katie LaRoque and Josh Solomon, and Bret Barrowman of the Center for Global Impact.

“With this data, we work closely with our partners in Ukraine to counteract the spread of false narratives and strengthen government responses at the national and local level,” they write for IRI’s Democracy Speaks. “This data-driven approach marks the first step in developing effective programming aimed at mitigating the harm of propaganda and defending the information space that is crucial to a free society.”

CIMA’s latest report, A New Wave of Censorship: Distributed Attacks on Expression and Press Freedom, by disinformation expert Daniel Arnaudo of the National Democratic Institute (NDI), analyzes case studies from Ukraine, the Philippines, Turkey, Bahrain, and China. Arnaudo explains how new digital tactics are changing how we think about censorship, with profound implications for global media development.

As China ramps up its political warfare ops, check out NDI’s Battle of the Bots,* a discussion with Taiwan’s g0v on using chatbots to counter disinformation.

“European media: Russia has become the West’s last hope,” was the headline in an analytical commentary published by the Russian state-owned news agency RIA Novosti. The problem, however, is that the headline is simply not true, notes StopFake. Manipulative headline writing is a classical disinformation trick: a headline has the power to frame the understanding of the entire article that follows.

Genocide, Nazis and the 2018 FIFA World Cup serve as sources of inspiration for pro-Kremlin disinformation campaigners intent on creating a smokescreen around the violence in Ukraine, the EU East StratCom Task Force DisinfoReview reports:

As we have seen before, the increased violence in Donbas – like recently – is preceded and accompanied by an intensified disinformation campaign in pro-Kremlin media. The disinformation campaign presented Ukraine as the main aggressor against Russia and Donbas, stating that Ukraine is to launch a “large-scale offensive in Donbas during the World Cup” to provoke a “humanitarian disaster and an exodus of the population”,

Credit: CSIS

Disinformation is a key element in the authoritarian playbook, which is used (with some variations) in Hungary, Turkey, Venezuela and Russia, says the Washington Post’s Michael Gerson:

Dismantle checks on executive power. Control the criminal-justice system. Scapegoat minority groups. Co-opt mainstream parties. Discredit the independent media. Call for opponents to be jailed. Question the legitimacy of elections. Claim to be the embodied soul of the people.

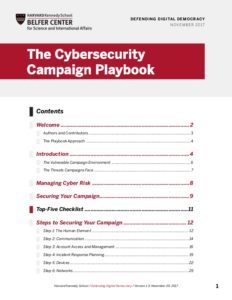

To get ahead of emerging threats, Europe and the United States should consider several policy responses, Brookings analysts Meserole and Polyakova suggest:

- First, the EU and the United States should commit significant funding to research and development at the intersection of AI and information warfare. In April, the European Commission called for at least 20 billion euros (about $23 billion) to be spent on research on AI by 2020, prioritizing the health, agriculture, and transportation sectors. None of the funds are earmarked for research and development specifically on disinformation. … As long as tech research and counterdisinformation efforts run on parallel, disconnected tracks, little progress will be made in getting ahead of emerging threats.

- Furthermore, the EU and the U.S. government should also move quickly to prevent the rise of misinformation on decentralized applications. The emergence of decentralized applications presents policymakers with a rare second chance….A model could be the United Nations’ Tech Against Terrorism project, which works closely with small tech companies to help them design their platforms from the ground up to guard against terrorist exploitation.

- Finally, legislators should continue to push for reforms in the digital advertising industry. As AI continues to transform the industry, disinformation content will become more precise and micro-targeted to specific audiences. AI will make it far easier for malicious actors and legitimate advertisers alike to track user behavior online….RTWT

NDI

g0v is a decentralized civic tech community in Taiwan that advocates for transparency of information and builds bottom-up civic tech solutions for citizens. To combat disinformation on instant messaging platforms, g0v has launched CoFacts, a collaborative fact-checking project that combines a chatbot with a hoax database, integrated within LINE (a popular messenger app in Asia). Disinformation can spread more rapidly within closed groups on messengers such as WhatsApp or LINE than on standard social media platforms, and news organizations and even platform owners find it hard to gain access for moderation or correction.

Speakers

- Min-Hsuan Wu (ttcat) / Deputy CEO of Open Culture Foundation / A hackathon organizer of g0v.twan, and an activist and campaigner of a number of social movements in Taiwan, including the anti-nuclear, environmental, LGBT and Human Rights movements.

- Lulu Keng / Executive Secretary of Open Culture Foundation international program / An experienced project manager skilled in cross-fields collaboration, environmental advocacy, civic movement, and indie music management.

- Hsiao-wei Chiu (Ipa) / g0v Co-founder / Documentary filmmaker, writer, mom of two under three.

- Yutin Liu / g0v contributor / A web developer who provides film record and live-stream technical support for civic movements.

- Aaron Wytze Wilson / Journalist and Graduate Student in Toronto / A journalist based in Toronto, currently pursuing his master’s degree at the University of Toronto. He was based in Taiwan from 2013 to 2017 and in China from 2005 to 2012.

When: Monday, June 4, 2018 12:00-1:30 pm

Where: National Democratic Institute

455 Massachusetts Avenue NW, 20001

8th Floor Board Room

A light lunch will be served. RSVP